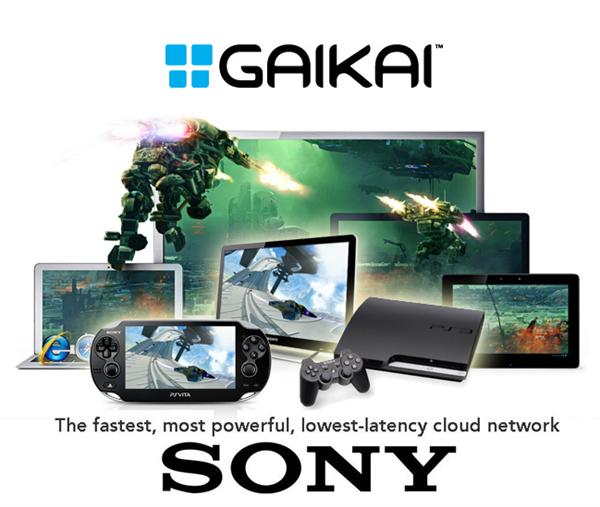

I was interested to hear about Sony’s plans for the future of gaming:

Turns out that they’ve bought Gaikai, a company specialising in rendering games in a data centre and streaming the results back to you. All we do is transmit our gamepad inputs.

Therefore there’s nothing to install locally, no updates or disks to deal with – and more importantly we don’t need super high-tech hardware at home that needs to be upgraded every 3-5 years. Technology upgrades happen in the data centre, and all we pay for is access to the game itself.

Sony say that they want to bring this service to the Playstation 4 (it’s currently in beta), the Playstation 3 and Bravia TV sets. This could mean a massive back catalogue of 10-year-old games from the PSOne and PS2 era, as well as top titles from PSP, PS Vita, PS3 and PS4.

It’s right up there with “cloud based office” solutions like Office 365 and the iWork suite – not to mention Dropbox, Flickr, Vimeo and whatever else we use as an external hard disk replacement. I like the cloud movement: it means I will have to visit the Computer Fixperts data retrieval much less, because I tend to have butterfingers with my fragile tech.

Looking back over the beginnings of computers in the sixties and early seventies, we’re now experiencing exactly what had been commonplace back then: computer time sharing.

The Sixties

Back in the day, computers were so large and expensive that there was no way the likes of you and I could have one at home. But universities and companies had them in something like a control room, with terminals in various other rooms to access the computer. That’s why Linux is such a capable multi-user environment: one machine, lots of users logging in and submitting jobs to The Machine.

At first those terminals were local and connected via a thick heavy cable to The Machine in the same building. Later you could have a terminal at home and dial in via a local (free) phone call and use the computer. Terminals were small keyboard type things with a monitor and literally no computing power.

Then in the mid to late seventies the MOS 6502 processor came out and started the home computer revolution. Over the next few decades the likes of you and I bought computers and ran them at home, and it didn’t take long for technology to become so cheap and ubiquitous that our machines at home (and in our pockets) were better than what was sitting in those custom data centres. Those were the nineties and noughties.

Virtual Machines

Remote computers are great for “always on” services such as websites and emails – so you can rent a full computer in a data centre and manage it yourself if you like. Over the last decade or so it became more economical to maximise hardware capacity by creating virtual instances.

Those are “units” that emulate a full machine and react just the same, but in reality they’re just containers running on larger clusters of hardware. Rather than a CPU sitting idle 99% of the time, my machine’s idle time could be put to good use elsewhere and “pretend” to be someone else’s fully fledged machine. The added benefit is that if one physical machine in the cluster crashes, the others can take over its work until it’s fixed (much like a mirrored hard disk array, where the surviving disk covers for the failed one).

Meanwhile, on your desktop

We’ve reached the point in the home computer revolution where a faster processor, a shinier display with more colour depth or more RAM aren’t going to make a difference anymore. Neither will faster data lines to the outside world. We have all that and more.

We’re at the end of what the MOS 6502 started in the seventies. Your desktop can no longer be made any better than it already is. It’s an interesting thought to recognise this.

Which leaves the question: what’s going to happen next?

Sure, we can shift everything into The Cloud (THE shittest description for this phenomenon bar none) and access the same services we already have with slower machines and inferior hardware. Those machines could be good at other things: they can be small and battery powered or cheaper, like our smartphones and tablets – yet they would appear as powerful as a fully fledged laptop, because the computing is done in The Cloud. Amazon’s Silk web browser in the Kindle Fire is a good example: running on relatively slow hardware, it has web pages pre-rendered in Amazon’s data centre, which is supposed to deliver a better user experience.

So what’s next?

Just like back in the early eighties when ordinary humans laid their hands on the first home computers, all we can think of doing with “The Cloud” is to replicate what we can already do without The Cloud. That’s not innovation though, is it?

That’s why I think of Playstation Now as such a cool idea: have real-time graphics rendered off site and see the results at home – we’ve not seen this before.

I remember when the iPad first came out, and we all thought “this is great for emails and web browsing”, but we could do that already on laptops. Shortly afterwards it became a revolution: all these innovative apps started coming out which turned the iPad into something else, changing our lives. Ray was saying back then, “Currently the iPad is a placeholder” – meaning society hadn’t decided where this was going just yet.

Perhaps it’s the same with The Cloud. It may take another decade to really appreciate where this is going and what the next real innovation is (it’s not 3D or 4K TV by the way).

Personally I’d like to see a “less is more” approach. What’s happening online is quickly becoming more important to society than what’s actually around us. We need to get out more and care less about who’s writing what on The Internet, regardless of whether it’s some website or some social network. We have other senses that need to be fed too.

I hope both current and future generations (me included) will be able to remember that there are things other than The Cloud, and there are other places in our world than Online.