I made an interesting discovery the other day about one of my render nodes: with identical GPUs, one appears to render faster than the other. I didn’t get it at first. But with a possible explanation in my head, I got thinking and applied the same principle to my other node, and was able to cut its render time by roughly a quarter!

How exciting is that?

It’s all about retro hardware, and how to make the most out of what you already have. Let me tell you what I discovered, and how I made use of an old AMD/ATI GPU in my setup that I never thought would work.

The Discovery

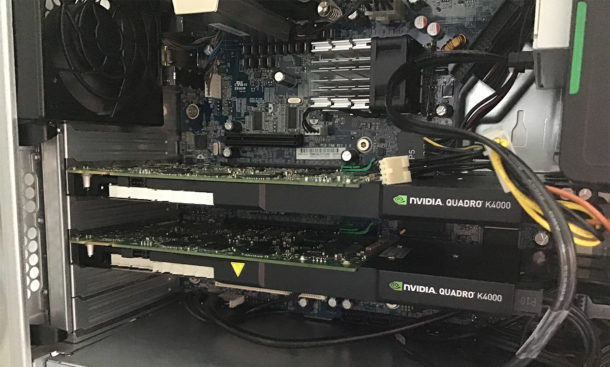

One of my render nodes is an HP Z600 dual Xeon setup with two NVIDIA K4000 cards. I’ve only recently added the second one; up until then it was a single K4000 node. Together with 24 CPU threads, it packs a good punch – even though the system is getting on for 10 years old now.

I’m using my nodes for Blender and DAZ Studio renders through RDP or NoMachine, so there’s no monitor connected to either of them. Blender has an issue with using two GPUs if the scene is larger than what fits into their memory (only during animations), so on my K4000 node, I have to switch one card off so that Blender doesn’t crash on the third image. I can live with that.

Since both GPUs are identical in that node, it stands to reason that it doesn’t matter which one I switch off, and which one I use for rendering. So I kept varying it just for fun, and noticed something remarkable: there does seem to be an impact on render speeds, depending on which card is selected.

The second card renders faster, in about half the time in fact! An average frame of my animation takes the node 12 minutes to render with the first card, and just over 6 with the second card.

It’s a mystery!

Assuming that the CPU’s speed doesn’t significantly change (unless Windows is doing something “really important” in the background), it either means that the first GPU is somehow broken or underclocked, or it is otherwise engaged with things that it needs to do. And what could that be?

Well, rendering the actual display of course!

I hadn’t thought of that! Even without a monitor connected, something needs to generate the picture I see through my RDP or NoMachine connection. I’m guessing that the first card is doing that, and if it were to render Blender things on top of that, well, there’s only so much oomph it has to make that happen. I understand that. It makes perfect sense.
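If you'd rather test that guess than take it on faith, here's a minimal sketch that asks `nvidia-smi` which card reports an active display and how busy each one is. It assumes `nvidia-smi` is on the PATH and that the driver exposes the `utilization.gpu` and `display_active` query fields for your card. The function names are made up for illustration, and whether a remote session shows up as an "active display" at all is something you'd have to check on your own node.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi, in this order.
QUERY = "index,name,utilization.gpu,display_active"


def parse_gpu_rows(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    rows = []
    for fields in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(fields) != 4:
            continue  # skip blank or unexpected lines
        index, name, util, display = (f.strip() for f in fields)
        rows.append({
            "index": int(index),
            "name": name,
            # Some cards report a placeholder instead of a number.
            "utilization": int(util) if util.isdigit() else None,
            "display_active": display == "Enabled",
        })
    return rows


def query_gpus():
    """Run nvidia-smi once and return one dict per NVIDIA GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_rows(result.stdout)
```

Calling `query_gpus()` while the desktop is idle, and again during a render, should show whether one card carries a baseline load that the other doesn't.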

So in conclusion, if I have to disable one of the cards, it’ll be the first one.
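Switching a card off can also be scripted instead of clicked. The sketch below is one way to do it through Blender's Python API, assuming Blender 2.8 or later with the Cycles add-on and a CUDA-capable card. It also assumes that Blender lists the GPUs in the same order as "first" and "second" card, which isn't guaranteed. `select_render_devices`, `apply_in_blender` and `FakeDevice` are hypothetical names; `FakeDevice` only exists so the logic can be tried outside Blender.

```python
from dataclasses import dataclass


@dataclass
class FakeDevice:
    """Stand-in for a Cycles device entry, for trying the logic outside Blender."""
    name: str
    type: str  # e.g. "CUDA" or "CPU"
    use: bool = True


def select_render_devices(devices, keep_index):
    """Enable only the GPU at keep_index (counting GPUs only).

    CPU entries are left untouched, so CPU rendering stays as configured.
    Returns the names of the GPUs that remain enabled.
    """
    gpu_position = 0
    for device in devices:
        if device.type == "CPU":
            continue
        device.use = (gpu_position == keep_index)
        gpu_position += 1
    return [d.name for d in devices if d.type != "CPU" and d.use]


def apply_in_blender(keep_index):
    """Apply the selection to Cycles. Only works inside Blender."""
    import bpy  # available only in Blender's bundled Python

    prefs = bpy.context.preferences.addons["cycles"].preferences
    prefs.compute_device_type = "CUDA"  # assumption: CUDA backend
    prefs.get_devices()  # refresh the device list
    return select_render_devices(prefs.devices, keep_index)
```

Inside Blender, `apply_in_blender(1)` would keep the second listed GPU and disable the first, matching the conclusion above.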

The Implications for The Future

I went to bed, having kicked off a render on all my computers, when I was thinking of my other render node. It’s also a Z600 with a dual Xeon CPU, and a single GTX 970 GPU. Surely it too would be faster if it had a second GPU to render the display, which would leave the GTX 970 free to render Blender and/or DAZ images without also having to draw the desktop I’m looking at.

Eureka, I thought: I have two old AMD cards on my shelf, collecting dust! I don’t know their exact model numbers, or how old and (un-)capable they are, but they should be able to display a picture at least. Questions raced through my mind: Would an AMD card work in addition to an NVIDIA card in the same machine? How would I be able to tell Windows which card to use for the display, and which one for rendering? Why didn’t I think of this before? Why do I have to go to bed when there’s important work to be done?

Experiments had to wait until the morning (this morning in fact), and I’m very happy to report that my theory does indeed work in practice!

How to make it happen

The cards I had were from the original Z systems I bought off eBay over the years. One came in a Z600, the other one came with my Z800 I believe. I never really gave them a second look, but I also didn’t want to throw them out. You never know when you need a no-frills graphics card to get a picture out of a computer.

One card I had on the shelf was the AMD Radeon HD 2400, also known as the B170. The latter is the only reference on the card itself, and thankfully Google brought up the information. Thank you, Google! It’s a card from 2008 that only has 256MB of DDR2 RAM. I don’t know much about it, other than what TechPowerUp tells me about its specs. Mine doesn’t have the fan though, it has a heat sink instead – that’s never a good start. You can see a picture of it at the top of this article.

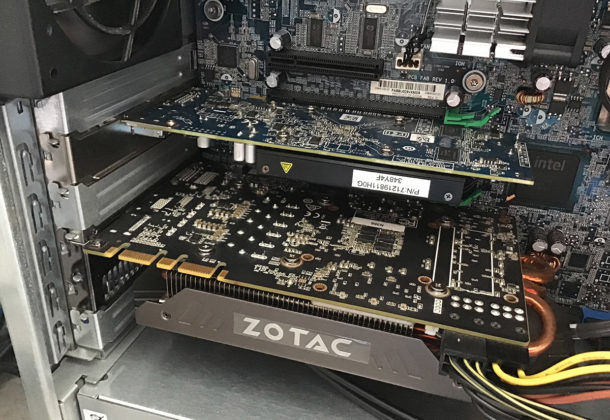

The other card was an AMD Radeon HD 8490, also known as the C367. It was made in 2013 and comes with a good 1GB of DDR3 RAM. I didn’t get a chance to snap a picture, as it’s already working hard in the Z600 as I type. Here are the full specs though. Since it’s a newer and much better card, I felt it was a good candidate to take up display duties in my other Z600 render node.

How would I tell Windows though which card to use for the Display?

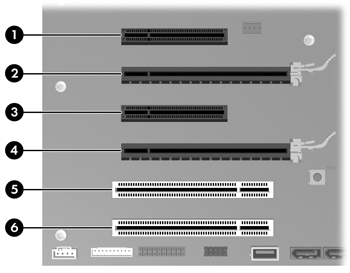

HP suggests that the two main slots are nominated as “primary” and “secondary” graphics card slots, so my first thought was to put the AMD card into slot 1 (or rather 2 according to the illustration), and the NVIDIA card into slot 2 (or rather slot 4). I did that, but Windows still used the NVIDIA card for the display. That’s not what I wanted. The idea was to leave it 100% free for 3D rendering duties.

On a positive note: the AMD card’s driver installed as if by magic, without interfering with the NVIDIA driver, so they both worked well on the same system without me having to do any tinkering. In the task bar I can see both the AMD Catalyst and the NVIDIA Control Panel. That was a good start!

Apparently there’s an option to pick a GPU in Windows though: right click on the desktop, choose Display Settings, then at the bottom there’s an Advanced Display Settings option. This will show which GPU is currently in use and gives options as to which one to use for a specific app when it’s started. That’s not what I wanted either. There’s even a drop down menu that showed my NVIDIA card, but not the AMD card. I couldn’t pick it manually.

So I swapped the cards around, thinking that perhaps them being in physically different positions would do some magic – but it did not. When I restarted Windows, the NVIDIA card was still the main display card.
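A quick way to see which adapter Windows is using for the desktop, without digging through settings dialogs, is to query the `Win32_VideoController` class from PowerShell. The sketch below wraps that in Python. It rests on an assumption: that only the adapter driving the desktop reports a current resolution, which may not hold inside every remote session. `parse_adapters` and `list_adapters` are names invented for this example.

```python
import json
import subprocess

# PowerShell pipeline that dumps each video adapter as JSON.
PS_COMMAND = (
    "Get-CimInstance Win32_VideoController | "
    "Select-Object Name, CurrentHorizontalResolution, CurrentVerticalResolution | "
    "ConvertTo-Json"
)


def parse_adapters(json_text):
    """Turn the JSON from PowerShell into a list of adapter summaries."""
    data = json.loads(json_text)
    if isinstance(data, dict):
        data = [data]  # ConvertTo-Json emits a bare object for a single adapter
    adapters = []
    for item in data:
        width = item.get("CurrentHorizontalResolution")
        height = item.get("CurrentVerticalResolution")
        adapters.append({
            "name": item.get("Name"),
            # Assumption: no resolution means the card is not driving a display.
            "resolution": (width, height) if width and height else None,
        })
    return adapters


def list_adapters():
    """Query Windows for its video adapters. Windows only."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_COMMAND],
        capture_output=True, text=True, check=True,
    )
    return parse_adapters(result.stdout)
```

An adapter that comes back with `resolution` set to `None` is most likely idle as far as the desktop is concerned, which is exactly the state you want for the render card.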

My last idea was to remove the NVIDIA card altogether, leaving the AMD card as the only graphics adapter in the system. I started Windows, connected with NoMachine, and that made everything work – more or less. The resolution was not a full 1920×1080, instead the card maxed out at 1600×900. That’s totally acceptable for kicking off renders and doing admin work. The card was in the primary Z600 slot, just for my reference.

I added the NVIDIA card into slot 2 (or rather 4), restarted Windows, and… voilà – the AMD card was still the master, with the GTX 970 now sitting idly by waiting for things to do. And it worked flawlessly! I started my render in Blender, and with its full potential now being available, my scene rendered in roughly a quarter less time than before!

- GTX 970 only – 8min 56secs (=100%)

- GTX 970 and Radeon HD 8490 – 6min 51secs (=77%)
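For anyone who wants to check the arithmetic, here's a small sketch that works the two timings above into percentages. "Time saved" and "speed-up" are not the same number, which is why both are shown. The helper names are only for illustration.

```python
def to_seconds(minutes, seconds):
    """Convert a minutes/seconds render time into seconds."""
    return minutes * 60 + seconds


def compare(before_s, after_s):
    """Compare two render times given in seconds."""
    return {
        "time_ratio": after_s / before_s,      # new time as a share of the old
        "time_saved": 1 - after_s / before_s,  # share of the old time saved
        "speedup": before_s / after_s,         # frames per hour, new vs. old
    }


before = to_seconds(8, 56)  # GTX 970 only
after = to_seconds(6, 51)   # GTX 970 plus Radeon HD 8490 for the display

result = compare(before, after)
print(f"New time is {result['time_ratio']:.1%} of the old time")
print(f"Time saved per frame: {result['time_saved']:.1%}")
print(f"Throughput: {result['speedup']:.2f}x")
```

With these timings the frame takes 76.7% of the original time, which is a saving of 23.3% per frame, or about 1.30 times the throughput.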

Conclusion

It’s good times ahead! If you rely on remote connections and rendering on other remote machines, check whether installing an old or second GPU is an option. The same principle should work with built-in on-board GPUs like the Intel series. Rather than disabling them completely, put them to work on the display and get some extra rendering power out of the hardware you already have.

I never thought this was an option, but I’m glad I’ve discovered it. New hardware isn’t always the answer (even though it often is). Some TLC for the old silicon chips might yield a hidden speed increase that wasn’t obvious before.

Any questions, please let me know below.

Brilliant…

Thank you, Jim!